Introduction

With the rising popularity and fast moving improvements of Large Language Models (LLMs), people are clamoring to find a way to custom tailor their responses. LLMs are trained on curated datasets that only contain information up to a certain point in time. For example, at the time of writing this, Chat GPT has no idea about the new iPhone specs! LLMs also get watered down with the vast amount of data it is trained on. If you are looking for a specific cookie recipe, a general purpose LLM would be trained on hundreds or thousands of different recipes. It might still regurgitate a reasonable recipe, but chances are that it will not be exactly what is expected.

The Solution

This is where datastores come in handy. LLMs place heavy weight for inference on tokens in its context. Instead of asking it to answer a question about our generic cookie recipe, we can tell it what our recipe is, and then ask the question.

Let’s start digging into the technical details (and by technical, I basically mean natural language). When interacting with an LLM, our request is typically referred to as a prompt.

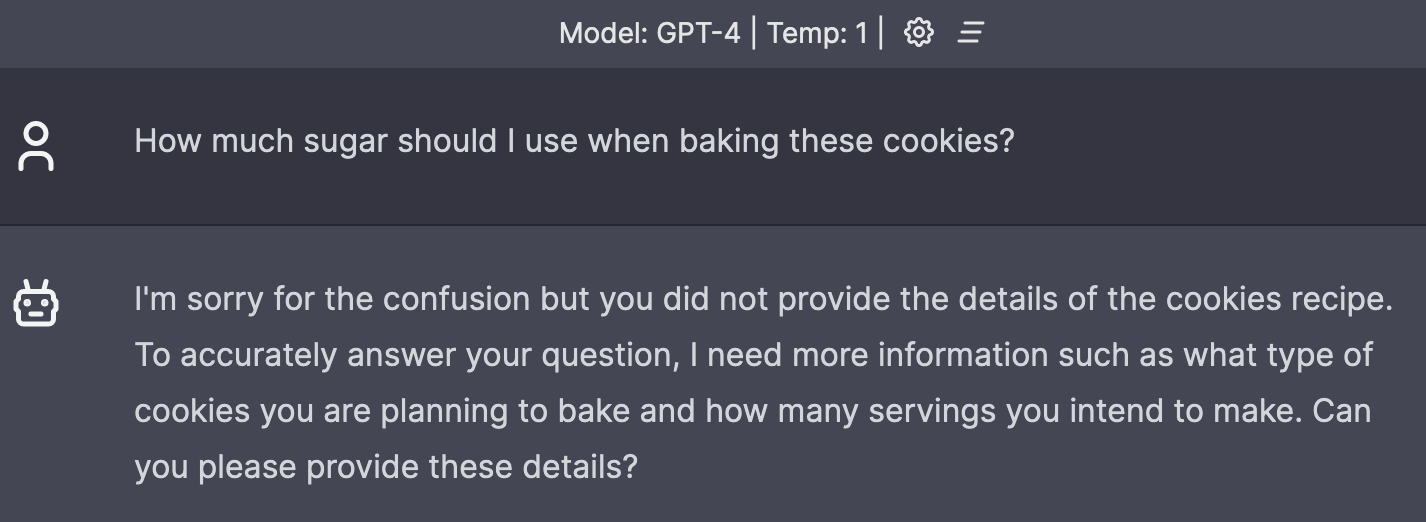

For this example, let’s say we own a repository of cooking recipes and want our users to be able to ask a chatbot about the recipe they are viewing. Here is an example of a prompt our user might want to ask:

How much sugar should I use when baking these cookies?

And here is the response using a web UI to interact with the LLM as a chatbot:

As we can see, the LLM did not know what kind of cookie we are interested in baking, or how many cookies we need.

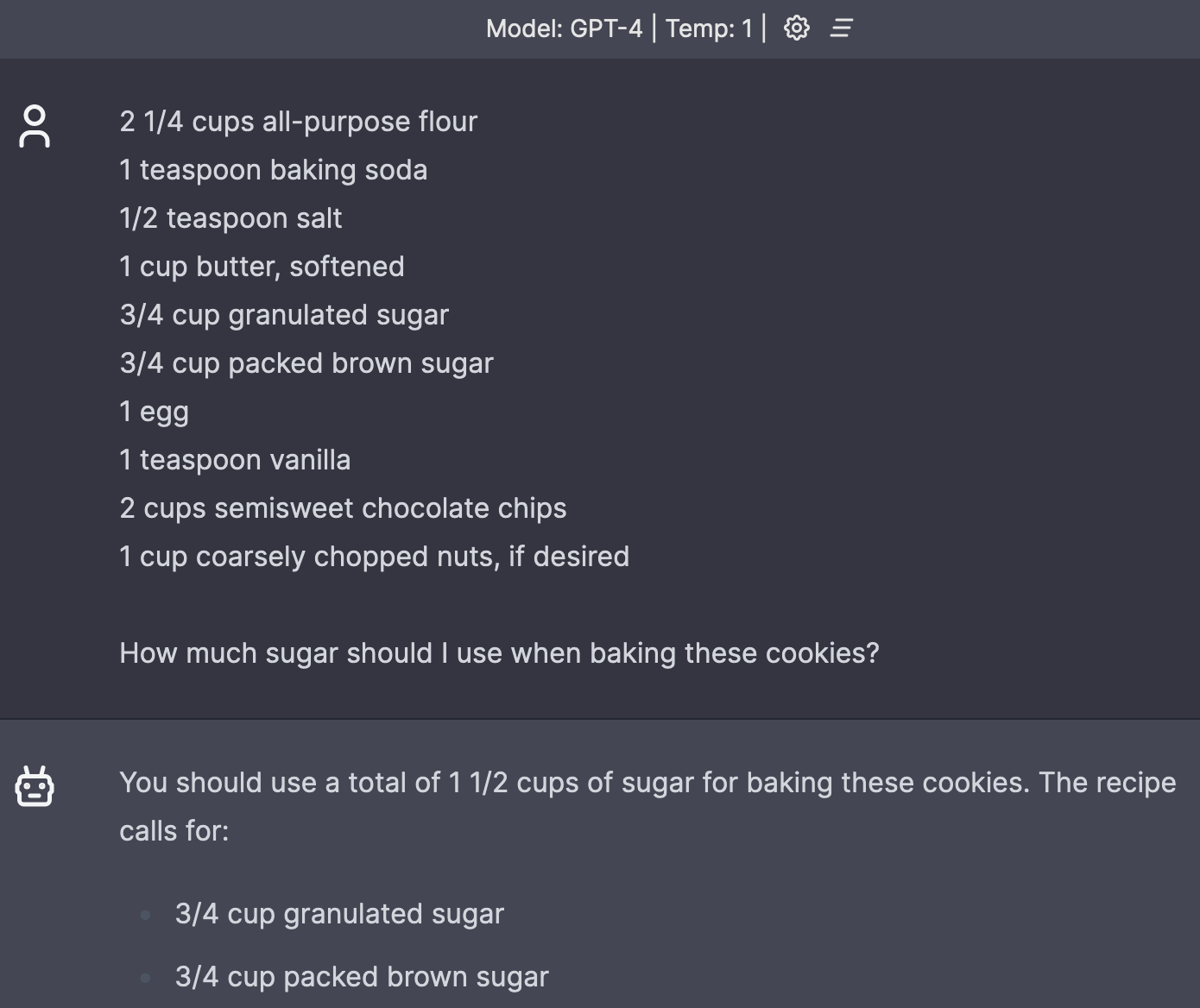

If we take advantage of context in the prompt. The LLM should be able to answer our question accurately. Let’s start throwing in some string templating. This is what context would look like before and after our templating:

Before:

{RECIPE}

How much sugar should I use when baking these cookies?

After:

2 1/4 cups all-purpose flour

1 teaspoon baking soda

1/2 teaspoon salt

1 cup butter, softened

3/4 cup granulated sugar

3/4 cup packed brown sugar

1 egg

1 teaspoon vanilla

2 cups semisweet chocolate chips

1 cup coarsely chopped nuts, if desired

How much sugar should I use when baking these cookies?

And how does the LLM respond given the new information?

The LLM pulled the correct information. We did not specify what type of sugar we were asking about, and the LLM returned both!

That’s great, but we don’t want to be copying and pasting recipes all the time. This is where our datastore comes in, and the subject of our blog post, Retrieval Augmented Generation (RAG). If we know what information is relevant to our request, we can query our datastore for this information and inject the context directly into the prompt for our users.

The datastore could be any datasource. Vectorstores are currently very popular, as they use embeddings in the same way LLMs do in order to group text together by similarity. The truth is that vectorstores are not always the right answer (they are great and I still love them for their strengths). In our example, a relational database would be perfectly suitable. The user is on our website, we query the database to provide them a recipe, and we have an identifier we can use to pull the information and inject it into the context for them.

For completeness, here is an example python script (that I generated with an LLM) that would do this for you:

import psycopg2

from openai import OpenAI

client = OpenAI(

# defaults to os.environ.get("OPENAI_API_KEY")

# api_key="My API Key",

)

def get_recipe(name):

# Connect to your postgres server

conn = psycopg2.connect(

dbname='your-db-name',

user='your-username',

password='your-password',

host='your-host',

port='your-port',

)

cur = conn.cursor()

cur.execute(f"SELECT ingredients FROM recipes WHERE name = '{name}';")

results = cur.fetchone()

cur.close()

conn.close()

if results:

return results[0]

else:

return None

def ask_gpt3_about_recipe(recipe_name, question):

ingredients = get_recipe(recipe_name)

if ingredients is None:

return 'Cannot find such recipe'

prompt = f'''

RECIPE:

{ingredients}

QUESTION:

{question}

'''

# Execute the query.

chat = client.chat.completions.create(

model='gpt-3.5-turbo',

messages=[

{

'role': 'system',

'content': "You are an amazing baking assistant. You have access "

"to recipes in .txt files. You can answer questions about "

"these recipes and provide suggestions.",

},

{'role': 'user', 'content': prompt},

],

)

return chat.choices[0].message.content

if __name__ == '__main__':

answer = ask_gpt3_about_recipe(

'Chocolate Chip Cookies',

'How much sugar should I use when baking these cookies?',

)

print(answer)

And the output:

❯ python rag.py

You should use 3/4 cup of granulated sugar and 3/4 cup of packed brown sugar when baking these cookies.

Twist

Currently, OpenAI is beta testing this ability on their platform using assistants. You can upload documents and ask questions about them without managing the storage. Let’s dig into the technical side as this is brand new. Here is an example script that should work out of the box with v1.3 of OpenAI’s python sdk.

from time import sleep

from openai import OpenAI

client = OpenAI(

# defaults to os.environ.get("OPENAI_API_KEY")

# api_key="My API Key",

)

def ask_assistant():

file = client.files.create(

file=open("cookie-recipe.txt", "rb"), purpose='assistants'

)

assistant = client.beta.assistants.create(

name="Recipe Helper",

description="You are an amazing baking assistant. You have access to recipes in .txt files. "

"You can answer questions about these recipes and provide suggestions.",

model="gpt-4-1106-preview",

tools=[{"type": "retrieval"}],

file_ids=[file.id],

)

thread = client.beta.threads.create(

messages=[

{

"role": "user",

"content": "How much sugar should I use when baking these cookies?",

"file_ids": [file.id],

}

]

)

run = client.beta.threads.runs.create(

thread_id=thread.id, assistant_id=assistant.id

)

while run.status in ['in_progress', 'queued', 'requires_action']:

sleep(1) # no streaming support yet!

run = client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id)

messages = client.beta.threads.messages.list(thread_id=thread.id)

return messages.data[0].content[0].text.value

if __name__ == '__main__':

response = ask_assistant()

print(response)

And the output:

❯ python baking_assistant.py

When baking these cookies, you should use 3/4 cup granulated sugar and 3/4 cup packed brown sugar according to the recipe.

This is fairly straightforward, but there are some items to note here as well as some limitations.

Assistants have access to tools and files. Currently, the only tools available are retrieval (for our use case), code-interpreter, and function calling. Most familiar with OpenAI’s chat model APIs will recognize the messages array, but here it has the additional support of file_ids that will automate our context injection.

Assistants also operate on threads. This is where we will need to append our familiar message objects. This will allow us to have multiple separate threads with the same assistant.

When uploading a document, it will automatically be chunked, converted into an embedding, and stored in a vector store that OpenAI manages. This is distinct from our earlier example where we used a relational database, but we are able to achieve similar results here.

Limitations

Assistant runs are done asynchronous, and it is not currently possible to stream the response. In our example, we need to poll the run to see when it has completed. However, support for streaming is in the pipeline.

OpenAI will also be adding support for users to include images in their messages, as well as generate images using DALL*E

Maximum file size for files uploaded is 512MB and supports specific extensions. Total upload capacity for an organization is 100GB.

Further Testing

The assistant API with file retrieval worked very well when providing a single recipe in a single file. However, when trying to provide multiple labeled recipes in a single file, or many files with individual recipes, the assistant began to struggle. It often responded with something along the lines of:

It appears that the file you've uploaded may provide a recipe with the specific amount of sugar for making chocolate chip cookies.

However, the myfiles_browser tool is not able to access it directly. If you're looking for a general guideline, a typical recipe for

chocolate chip cookies might use anywhere from 1/2 cup to 1 cup each of granulated sugar and brown sugar, for a batch that makes about 24 cookies.

If you want the precise amount from your recipe, please re-upload the file in a format that's compatible with the myfiles_browser tool,

such as a text-based file (like .txt or .pdf), and I'll be able to give you the exact amount listed in your recipe.

Here are the different utf-8 encoded files that were provided:

Single recipe: cookie-recipe.txt

Chocolate Chip Cookies:

2 1/4 cups all-purpose flour

1 teaspoon baking soda

1/2 teaspoon salt

1 cup butter, softened

3/4 cup granulated sugar

3/4 cup packed brown sugar

1 egg

1 teaspoon vanilla

2 cups semisweet chocolate chips

1 cup coarsely chopped nuts, if desired

Multiple recipes: cookie-recipes.txt

Apricot Oatmeal Cookies:

2/3 cup granulated sugar

2/3 cup packed brown sugar

1/2 cup butter or margarine, softened

1/2 cup shortening

1 teaspoon baking soda

1 teaspoon ground cinnamon or cardamom

1 teaspoon vanilla

1/2 teaspoon baking powder

1/2 teaspoon salt

2 eggs

3 cups quick-cooking oats

1 cup all-purpose flour

3/4 cup chopped dried apricots

1/2 cup finely chopped pecans

Chocolate Chip Cookies:

2 1/4 cups all-purpose flour

1 teaspoon baking soda

1/2 teaspoon salt

1 cup butter, softened

3/4 cup granulated sugar

3/4 cup packed brown sugar

1 egg

1 teaspoon vanilla

2 cups semisweet chocolate chips

1 cup coarsely chopped nuts, if desired

3-Ingredient Sugar Cookies

1 cup butter, softened

1/2 cup granulated sugar

2 1/4 cups all-purpose flour

4 tablespoons holiday-colored nonpareils or coarse decorating sugar, if desired

All attempts to extract information when providing multiple files failed. The most interesting discovery when testing a single file with multiple recipes is in simply switching the prompt.

Before:

How much sugar should I use when baking chocolate chip cookies?

After:

How much sugar should I use when baking these cookies?

The assistant was able to access the file and accurately extract the answer for all the recipes:

The amount of sugar you should use when baking cookies depends on the recipe you are using. Here are the sugar amounts for three different cookie recipes:

1. For Chocolate Chip Cookies:

- 3/4 cup granulated sugar

- 3/4 cup packed brown sugar

2. For 3-Ingredient Sugar Cookies:

- 1/2 cup granulated sugar

3. For Apricot Oatmeal Cookies:

- 2/3 cup granulated sugar

- 2/3 cup packed brown sugar

Make sure to use the recipe corresponding to the type of cookies you wish to bake.

OpenAI will inject the content of small files directly into the prompt, but will resort to using vector search for longer documents to extract relevant context. This might be what we are experiencing here. It is difficult to know exactly without more details. What is the chunk size? What is the overlap between chunks? Pinecone (a hosted vectorstore product) has a great document explaining the different strategies and considerations for optimizing the vectorstore. OpenAI is planning to expose more of these options to developers in the future.

Conclusion

Retrieval Augmented Generation brings the capabilities of LLMs to another level with relevant information. It is very reliable and can be implemented from scratch with tried and true datastores.

The Assistant API from OpenAI ties all of these pieces together and makes it very easy to get started. There are some wrinkles to iron out, but they are moving quickly, and it is still only in beta!

References:

- https://platform.openai.com/docs/assistants/overview

- https://platform.openai.com/docs/api-reference/assistants

- https://github.com/openai/openai-python

- https://www.pinecone.io/learn/chunking-strategies/